Contact site at dillonshook dot com

Surprisingly Cheap and Powerful Server Hosting for Indie Devs in 2025

I started looking for a place to host Cat Search and, of course, instead of doing things the easy way and just deploying it to one place, I had to do things the hard way and test all my options to see which one was best for me.

My goals for hosting are:

- Avoid vendor lock-in

- Get the best bang for the buck

- Stay under or around $15 per month

- Meet performance requirements (looking for at least sub 100ms response times under load)

- Have relatively easy ways to manage and monitor the servers

- Automatic deploys on push

- Database backups

- Ability to scale up and out in the future

- A good user experience

There are of course over 9,000 ways to host a site and API these days, but I’m looking to keep it as simple as possible to start with and avoid as much custom work as I can so I can focus on the product, all while keeping costs low to make this a profitable venture as soon as possible.

The architecture of the backend I’m deploying is quite simple, here it is:

Can’t get much simpler than that!

Contenders

I have a bit of experience with the big three hosting providers (AWS, GCP, and Azure) so I wanted to give them a shot, but I’m certainly not enough of an expert in any of them to bias my decision one way or the other. During my initial search for options I also found this article on VM Performance / Price that proved helpful in finding new options. Since it was focused on synthetic benchmarks and not hosting an API and database, I still wanted to continue with my test to see what kind of actual performance I would get.

Here’s the full list of hosts I came up with to try

The Traditional VPS Hosts

There were a number of other hosting platforms I looked into that got disqualified for one reason or another. Many made you pay for the servers all up front, and only had yearly deals which wasn’t going to work for this test. Others just looked straight up predatory and not a business I’d want to know my credit card number.

During my research I also found Cloud 66. This is not a hosting platform but a product that manages deploying your apps and databases to cloud providers or your own VPS. It can also manage database backups, autoscaling, and monitoring, which sounds great as a uniform way to manage servers and avoid vendor lock-in. I’d also be remiss if I didn’t mention CapRover, which I contributed to last year and use to host this site. It’s similar to Cloud 66 in that it manages Docker app deploys and gives you monitoring and a UI, but it doesn’t have database backup capability and is much more DIY than Cloud 66. So for this use case I chose not to use it.

Benchmark Setup

To consistently test each host I set up an Artillery load test that hits the site categorization API. Each request picks 15 random URLs from a list of 350 for the API to categorize. The API is mostly read heavy for this operation with a couple of queries per request, but it also does a few writes to separate tables.
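As a rough illustration of how each request body could be put together, here is a minimal Python sketch. The real test runs in Artillery, so this is not the actual test script; the placeholder URLs, the `urls` payload key, and the choice to sample without replacement are all assumptions made for the example. Only the 15 and the 350 come from the setup described above.

```python
import random

URLS_PER_REQUEST = 15  # from the post
URL_POOL_SIZE = 350    # from the post


def build_url_pool(size=URL_POOL_SIZE):
    # Placeholder URLs: the real list of 350 sites is not part of the post.
    return [f"https://example.com/page/{i}" for i in range(size)]


def pick_request_urls(pool, rng, count=URLS_PER_REQUEST):
    # random.sample draws without replacement, so a single request
    # never asks for the same URL twice (an assumption, see above).
    return rng.sample(pool, count)


pool = build_url_pool()
rng = random.Random(42)  # seeded so repeated runs pick the same URLs
payload = {"urls": pick_request_urls(pool, rng)}  # hypothetical body shape
print(len(payload["urls"]), "URLs in this request")
```

Seeding the generator is optional, but it makes it easier to replay the same sequence of requests against each host.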

Before each test I seeded the new database with around 21 thousand rows of categorized URLs to have the queries return meaningful data.

For the load test itself I set up Artillery to run in phases of 10 seconds each, starting at 5 requests per second (RPS), then going to 10, 50, 100, and 500.
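To get a feel for how much traffic that schedule adds up to, here is a small Python sketch of the arithmetic. It assumes each phase holds its rate constant for the full 10 seconds (no ramping between phases), which the setup above doesn't spell out, and the function names are made up for the example.

```python
PHASE_SECONDS = 10                     # from the post
ARRIVAL_RATES = [5, 10, 50, 100, 500]  # requests per second, from the post
URLS_PER_REQUEST = 15                  # from the post


def phase_schedule(rates=ARRIVAL_RATES, seconds=PHASE_SECONDS):
    # Build one entry per phase, assuming a constant rate within each phase.
    schedule = []
    start = 0
    for rate in rates:
        schedule.append(
            {"start_s": start, "duration_s": seconds, "rps": rate, "requests": rate * seconds}
        )
        start += seconds
    return schedule


def total_requests(schedule):
    return sum(phase["requests"] for phase in schedule)


schedule = phase_schedule()
print("total requests:", total_requests(schedule))                       # 6650
print("URLs categorized:", total_requests(schedule) * URLS_PER_REQUEST)  # 99750
```

Under that assumption a full run is 50 seconds long, and 5,000 of the 6,650 requests land in the final 500 RPS phase, so the last phase dominates the load.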

Results

For each host I documented my setup and deployment experience as well as any bugs or documentation problems that I hit along the way. These of course shouldn’t be deal breakers but are good to know for your own user experience, especially if you’re using the host for the first time.

Render

As my first deploy target there were a few bumps that required code updates to fix database connection pool issues and accept a connection string environment variable, but once I sorted that out the code I deployed was exactly the same on all the hosts.
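For anyone making the same change, here is a minimal Python sketch of reading the database settings from the environment so the same build can run on every host. The variable names `DATABASE_URL` and `DB_POOL_MAX`, the local default URL, and the pool size of 10 are all assumptions for illustration; the post doesn't say what language or names the actual API uses.

```python
import os
from urllib.parse import urlsplit

# Hypothetical local default, used only when no environment variable is set.
DEFAULT_URL = "postgresql://postgres:postgres@localhost:5432/catsearch"


def database_settings(env=os.environ):
    # Read the connection string from the environment so nothing
    # host-specific is baked into the code.
    url = env.get("DATABASE_URL", DEFAULT_URL)
    parts = urlsplit(url)
    if parts.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unsupported database URL scheme: {parts.scheme!r}")
    return {
        "host": parts.hostname,
        "port": parts.port or 5432,
        "user": parts.username,
        "password": parts.password,
        "database": parts.path.lstrip("/"),
        # Keeping the pool size configurable helps stay under small connection limits.
        "pool_max": int(env.get("DB_POOL_MAX", "10")),
    }


print(database_settings({"DATABASE_URL": "postgres://app:secret@db.internal:5433/cats"})["host"])
```

Every place that opens a connection should go through the one function, which avoids the kind of bug where a second code path still has the connection details hardcoded.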

The first instance I created was in Ohio US East with the free tier which gives you such an overwhelmingly generous 0.1 CPU and 256MB RAM with 1GB Storage. The basic instance went up pretty quickly but it took quite a few minutes before the deploy started cloning the repo. Probably shouldn’t expect much from the free tier though.

It took me a few minutes to figure out how to connect to the Postgres DB from my local machine to run the seeding script (turned out to be the hostname not including the remote URL, which I had to dig for) but the deploy mostly worked the first time. A miracle in devops history indeed. The only problem was a bug in my code where another place wasn’t using the env var for the connection string.

On the free tier though your app shuts down all the time after a minute of inactivity. After this happened I tried using the restart button but that didn’t do anything, so I had to run a full deploy to get it responding again.

Pretty poor results if I do say so myself. But let’s upgrade to the paid tier to give them a fair shake.

Even getting a 0.5 CPU 1GB RAM instance is $19/mo, which is above the budget threshold, so I went with their most basic paid plan to retest. The DB is $6/mo for 256MB RAM and 0.1 CPU (lol is this 2005?) and the “App Starter” is $7/mo for 512MB RAM and 0.5 CPU.

Better, but really not that good.

Digital Ocean

For DO I exceeded the budget by a bit to be able to use their fully managed PostgreSQL offering that starts at $15 a month and then their app platform which starts at $5 per month for 1 shared vCPU, 512MB RAM, and 50GB bandwidth hosted in their New York data center.

First impressions were good with an easy setup for both the DB and app, but it took a little while to sort out a few configuration issues with the health check using the wrong port, the database connection pool settings, and environment variables for the DB connection. After editing the app config a couple of times to change an environment variable, it got a bit annoying that the whole app has to rebuild and redeploy, which takes a couple of minutes, when it could just be a container restart taking seconds.

While running the test it was nice to see they have built-in load charts, query stats, and DB logs. During peak load the app still didn’t come close to maxing out CPU usage or memory in the app or DB, which makes me think connections are the bottleneck. That will have to be a separate investigation though since I’m trying to keep everything as similar as possible between tests.

Linode

I was going to run the test on my existing tiny server that runs this site but then thought that wouldn’t be a fair comparison, so I created a new server for the test.

To stay on budget the instance had to be 1 shared CPU, 2GB RAM for $12/mo in US East (Newark, NJ).

AWS

AWS is a behemoth of complexity, but since I was just using one VPS with Cloud 66 handling the hard parts of deploys it took less than an hour to figure out the IAM permissions and credentials for Cloud 66 to use and which instance type is at the right price point.

I landed on trying a t3.small on us-east-1 (N. Virginia) which has 2 vCPUs, 2GB RAM and should be ~$15/mo.

I wanted to compare the single VPS solution to “the Amazon way” using RDS (and try out Aurora) and Fargate with Elastic Container Service (ECS) for the app, but playing with the AWS pricing calculator quickly proved that to be a non-starter.

The smallest RDS instance for just PostgreSQL is the db.m1.small machine instance and that’ll run you $43.80/mo by itself for a single AZ. Then tack on $2.30/mo for the minimum 20GB storage and $1.90/mo to back up the 20GB. With PostgreSQL compatible Aurora the smallest instance is db.r4.large which is $211.70/mo plus DB storage prices that vary wildly based on baseline IO rates, which I have no idea how to estimate. The good news is that it’s only $0.42/mo for the same 20GB backup!

With Aurora Serverless the minimum you can do is 0.5 “Aurora Capacity Units (ACUs)” per hour which are described as “Each ACU is a combination of approximately 2 gibibytes (GiB) of memory, corresponding CPU, and networking”. What sounds like a tiny amount of power will still run you $43.80/mo with the same DB storage and backup prices as above.

If you actually want to run an app as well that’ll be extra! Calculating the cost for Fargate with 50% utilization, 2vCPU 8GB RAM for just one container came out to $42.53/mo.
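Adding up the calculator numbers quoted above makes the gap obvious. This is just the arithmetic as a small Python sketch, using the plain RDS PostgreSQL option plus the one Fargate container; prices are kept as integer cents to avoid floating point rounding, and the variable names are made up for the example.

```python
BUDGET_CENTS = 15_00  # the "around $15 per month" goal

# Monthly prices quoted above, in cents.
RDS_POSTGRES_CENTS = {
    "db.m1.small instance (single AZ)": 43_80,
    "20GB storage": 2_30,
    "20GB backup": 1_90,
}
FARGATE_CENTS = 42_53  # one container, 2 vCPU, 8GB RAM, 50% utilization


def dollars(cents):
    return f"${cents / 100:.2f}"


rds_total = sum(RDS_POSTGRES_CENTS.values())
stack_total = rds_total + FARGATE_CENTS

print("RDS PostgreSQL:", dollars(rds_total))                      # $48.00
print("RDS + Fargate: ", dollars(stack_total))                    # $90.53
print(f"Times over budget: {stack_total / BUDGET_CENTS:.1f}x")    # 6.0x
```

So even the cheapest managed combination lands at roughly six times the budget, before any Aurora storage charges enter the picture.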

I guess “the Amazon way” is not as cheap as I thought after all…

GCP

A first glance at a dedicated Postgres DB in the calculator shows it’s well outside my price range so I’ll once again sift through the plethora of VM options.

The best equivalent instance I found is an e2-small with 2 vCPU and 2 GiB RAM for $14/mo. I chose us-east4 in N. Virginia to be a comparable location for the latency.

The signup experience had a new wrinkle to it that no other provider made me do. You have to verify your credit card by waiting for pending charges to show up on the statement and then enter the amounts. After 15 minutes of refreshing my statement I gave up and went and did something else useful. Only after coming back 45 minutes later did the charge show up, and I could proceed with waiting another 25 minutes for the app to deploy (the longest of any provider by far).

From the Compute Engine API page, clicking Manage just sends me back to the same page

Welp, that was disappointing

Azure

Once again from poking around the Pricing Calculator, dedicated DB instances are way too expensive and cryptically named Dadsv5 - Series which means absolutely nothing unless you have the decoder ring. No surprise the list of virtual machines is nuts but narrowing down by price once again I land on the B1ms instance with 1 core, 2GB RAM for $15/mo deployed to US East in Virginia.

I have used Azure a bit before so I know a bit of what to expect but just trying to follow the instructions to set up credentials for Cloud 66 to use was like trying to escape a corn maze. Why are there two different admin dashboards? https://entra.microsoft.com vs https://portal.azure.com Why don’t the tabs look like tabs? When I tried to add a role to a subscription for an app following the instructions I couldn’t find the “Contributor” role because it was hidden behind another tab “Privileged administrator roles” instead of “Job function roles” that just looked like a sub-heading.

The Cloud 66 docs are light on this process and the first couple deploys failed with these errors on which “namespaces” the subscriptions need.

An exception occurred in your cloud during server creation: Can't create a default Virtual Network in Azure cloud: The Azure API responded with HTTP error 409 and error code(s) MissingSubscriptionRegistration: The subscription is not registered to use namespace 'Microsoft.Network'. See https://aka.ms/rps-not-found for how to register subscriptions.. If the problem persists please contact support.. If the problem persists please contact support. master

It’s also annoying that Cloud 66 has to create a new app (even with copied vars) to retry the initial deployment after it fails. It claims it will clone all the variables of the app deployment but it actually forgets the deployment target, machine type, and DB each time. I finally got it working though, with the first successful deploy taking 13 minutes.

Hetzner

After all that messing around with the big cloud providers the signup and setup process for Hetzner was a breath of fresh air. All Cloud 66 needed was an API key, which took a few clicks to create.

There were a couple of different options at my price point in their Ashburn, Virginia data center so I wanted to try out both the dedicated and shared CPU instances.

First deploy was with the dedicated 2 vCPU, 8GB RAM, 80GB storage for $15/mo which first deployed in 8 minutes.

Then I tried their shared 3 vCPU, 4GB RAM, 80GB storage instance for $11/mo.

DNF

I tried all of the following providers but wasn’t able to get them working for one reason or another. Some of them I’m sure I could get working if I spent more time on it, but I disqualified them for being a time suck.

Supabase

First impression creating a new app: “Network error trying to create new resource”. Second impression: “Project with name X already exists in your organization”.

After actually creating the DB it took a bit of searching around to find the connection string for connecting directly to the DB for seeding.

AAannnd then I realized you can’t actually deploy a full containerized app, only edge functions, which for me defeats the point of hosting your API close to your DB.

Heroku

I did use Heroku like 10 years ago and surprisingly my password manager has the login saved. But of course trying to use it prompted the error “There was a problem with your login”. Went through the forgot password flow but never got an email, so time to sign up again I guess. There’s an annoying signup questionnaire and mandatory 2FA right out of the gate which slows down the whole process.

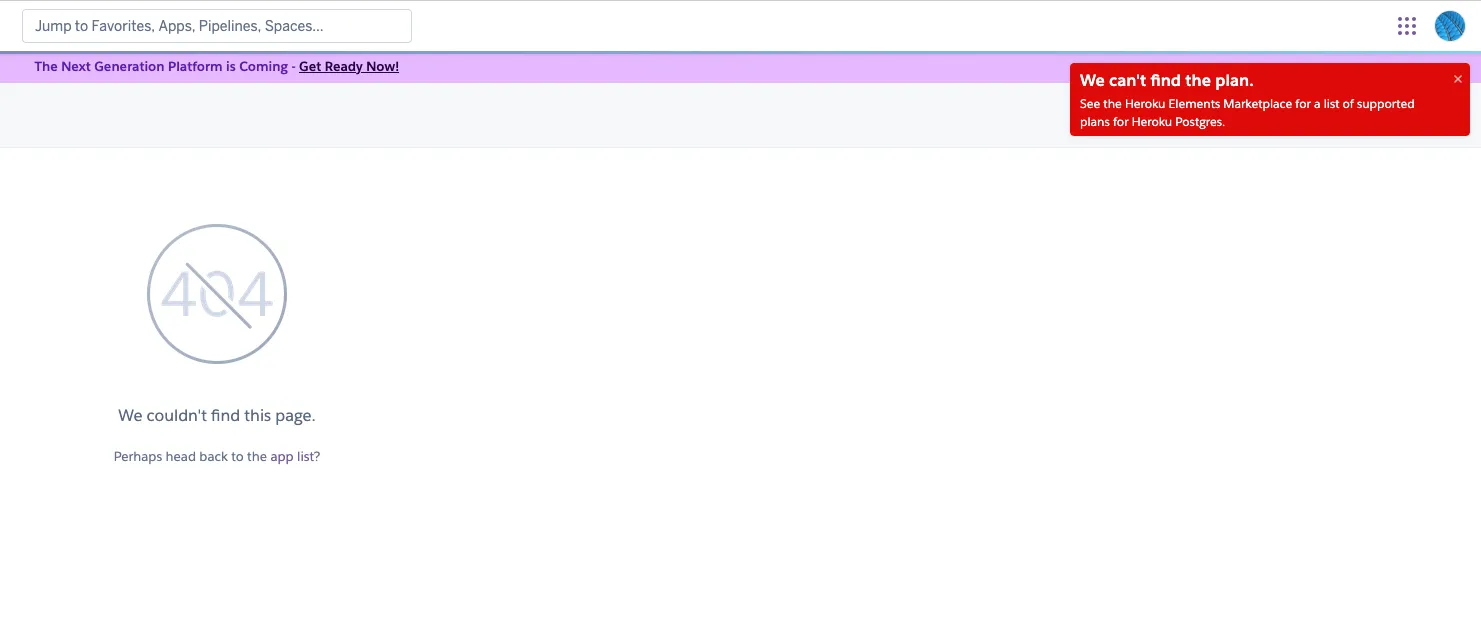

Looking through all the “essential” DB plans they all seem to be the same except for storage limit, and are listed for $5/mo with “0 Bytes RAM, 1GB storage”. Pretty sure there’s a mistake in there but I give it a shot anyways and run straight into a 404 page with an error popup.

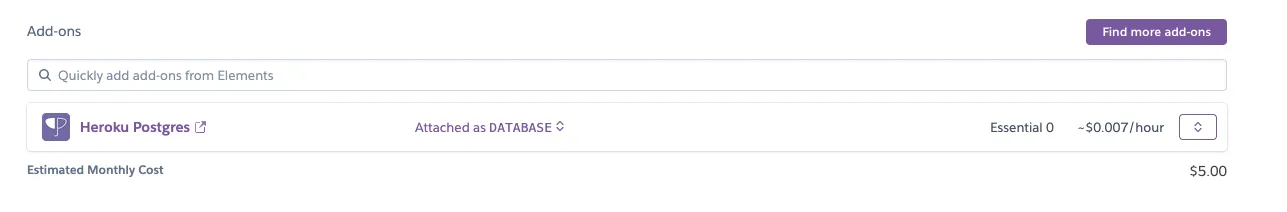

I guess they don’t offer that one anymore and I’ll have to use the $50/month plan with 4GB RAM, 64GB storage, and 120 connections instead? Nope, that one doesn’t work either… I had to look up a YouTube tutorial to find out you have to create an app first and then attach Postgres as an add-on… Ok, I guess I’ll do that even though it seems backwards to me. I create the add-on and then go click the “Heroku Postgres” link to find out the credentials for seeding and it does **exactly what I expect** … and opens the favicon in a new tab https://addons-sso.heroku.com/favicon.ico

Going back to the page and trying again it works, I guess their JS was just slow or broken? On the next page I’m amused to see they actually just use Amazon RDS under the hood.

The next step is to try to figure out how to deploy the app. Heroku apparently can’t figure out there’s a Dockerfile, and from the docs I found it looks like you have to set up a heroku.yml file. I tried a version of that which didn’t work, then saw you should use this build pack thing.

The article doesn’t really say how to use them in your project and there are two different flavors of build packs, but the descriptions are gobbledygook if you don’t already know what they are.

That all seems like quite the hassle for this test so I switch to just use the container registry method. But come to find out there’s no way to do that in the UI, you have to install the Heroku CLI, sigh…

I go through their instructions for pushing a container but of course it fails at

heroku container:push cat-search-api

› Error: The following error occurred:

› Missing required flag app

› See more help with --help

Added the --app parameter that’s missing in all their examples and then got another error:

heroku container:push --app=cat-search web

› Error: This command is for Docker apps only. Switch stacks by running heroku stack:set container. Or, to deploy ⬢ cat-search with heroku-24, run git push heroku main instead.

Did that and now it rebuilds the Docker image even though nothing’s changed, and I think it deploys, but there wasn’t anywhere to specify the environment variables during this process. So you have to switch back to the UI and add them in “Config Vars”.

Finally open up the deployed app URL and of course get an app error. Turns out the app inside the container can’t bind to port 8080. Mess around with things some more and try both port 80 and 443. No luck…
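One thing worth checking when a fixed port fails like this: Heroku assigns the port for a web process through the `PORT` environment variable and expects the app to listen on it. Whether that was the actual cause here isn’t something this test established, so treat the following as a hedged Python sketch of the general pattern rather than a fix for this app. The handler, the function names, and the 8080 fallback are made up for the example.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def listen_port(env=os.environ, default=8080):
    # Prefer the platform-assigned port; fall back to a fixed one for local runs.
    return int(env.get("PORT", default))


class HealthHandler(BaseHTTPRequestHandler):
    # Tiny stand-in for a real API: answers every GET with "ok".
    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(env=os.environ):
    # Bind on all interfaces so the platform's router can reach the container.
    return HTTPServer(("0.0.0.0", listen_port(env)), HealthHandler)
```

Calling `make_server().serve_forever()` starts it. The same idea applies in any language: read the port at startup instead of hardcoding 8080, 80, or 443.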

And this is where my Heroku story ends: 2 hours after it started with nothing to show for it except the experience to know I’ll never use Heroku again.

Neon

Should have found this in the initial search but found out it doesn’t support deploying an app alongside the DB.

Vercel

Also should have found earlier that Vercel doesn’t support Docker deploys. This one did surprise me a bit since I know they let you deploy edge functions to run Next.js apps and I thought they were angling to be a more comprehensive hosting platform.

Oracle

To be honest, before reading the cloud provider performance article I didn’t even know what Oracle really did anymore other than sell database lock-in. But apparently they have a good performance to price ratio so let’s give them a shot and see.

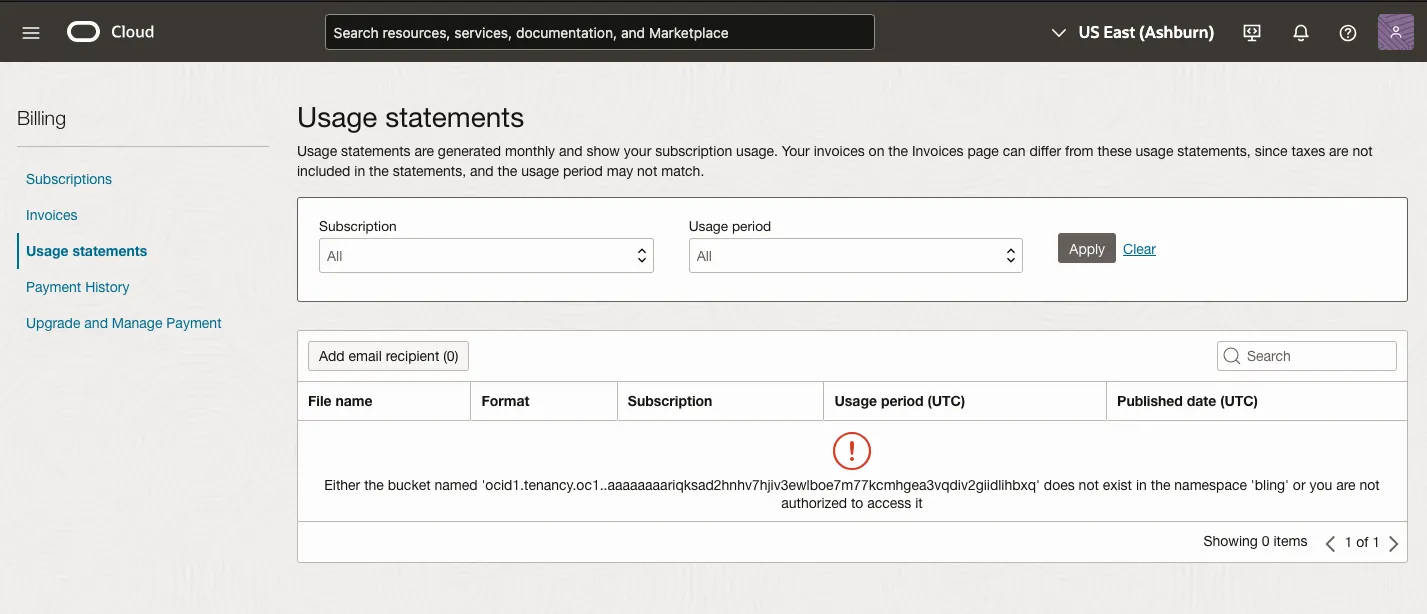

After signing up I go to create a VM instance that’s in the price ballpark and go for the Compute - Standard - E5 - Flex AMD instance with 2 OCPU and 8GB RAM running Ubuntu 24 but I’m not really sure on the price when selecting it since it doesn’t list it. After it’s up I go to the Billing Screen to see if it lists an estimated cost, but that page has an error.

I had to go to the public Cost Estimator to see it’s actually $56/mo. Outside my budget but let’s try it anyways, so I get it registered in Cloud 66 as a custom server.

The first deployment fails after 19 minutes with the error “system services failed to start”. Searching around a bit for things to try, I find this command to get the Kubernetes logs.

journalctl -u kubelet

Mar 14 02:03:33 master kubelet[23739]: I0314 02:03:33.537146 23739 scope.go:117] "RemoveContainer" containerID="93a9c646c1dab98ef4c0707f35b4d95d96dd10612081a6fe22fc43523b9c1df3"

Mar 14 02:03:33 master kubelet[23739]: E0314 02:03:33.537283 23739 pod_workers.go:1301] "Error syncing pod, skipping" err="failed to \"StartContainer\" for \"k8s-event-relay\" with CrashLoopBackOff: \"back-off 5m0s restarting failed container=k8s-event-relay pod=k8s-event-relay-6885c57f84-ph7rc_kube-system(ef57>

Not sure where to go from here, I try a different instance with 1 OCPU and 8GB RAM. Same problem. One more time, this time with Ubuntu 22 on the 1 OCPU, 8GB RAM instance. It failed again, and since these were all fresh machines with the Ubuntu versions Cloud 66 supports I don’t particularly feel like spending more time messing with it and give up. I’m hesitant to blame Cloud 66 though since the custom server setup worked flawlessly for Linode.

Combined Results

For all the hosts I was able to deploy to here’s the combined view with their p90 times across the different load rates. In the cases where the load numbers didn’t fall exactly along the delineations I linearly interpolated to get a more accurate result.
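For the curious, the interpolation step could look something like this minimal Python sketch. The measurement points in the example are invented purely to show the calculation, not real results from any host, and the function name is hypothetical.

```python
def interpolate_latency(points, target_rps):
    """Estimate p90 latency at target_rps from (measured_rps, p90_ms) pairs.

    Uses straight-line interpolation between the two measurements that
    bracket the target rate.
    """
    pts = sorted(points)
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 <= target_rps <= x1:
            if x1 == x0:
                return y0
            fraction = (target_rps - x0) / (x1 - x0)
            return y0 + fraction * (y1 - y0)
    raise ValueError("target_rps is outside the measured range")


# Made-up example: measurements landed at 42 and 58 RPS instead of exactly 50.
print(interpolate_latency([(42, 80.0), (58, 120.0)], 50))  # 100.0
```

The function refuses to extrapolate beyond the measured range, since a straight line says little about latency past the last data point.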

I also wanted to see performance compared to the monthly cost in some way so the best metric I could come up with is taking the ratio RPS / Latency / Monthly Cost from the p90 values that are shown above. This ratio will give greater values to the hosts that can deliver more requests at lower latencies for a lower cost.

So using AWS as an example: 50 RPS / 569ms latency / $15 a month ≈ 0.0059 which you can see rounded to 0.006 on its blue bar below.
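The same calculation as a few lines of Python, in case you want to plug in your own numbers. The function name is made up for the example; the inputs are the AWS figures from above.

```python
def value_score(rps, p90_latency_ms, monthly_cost_usd):
    # Higher is better: more requests served, at lower latency, for less money.
    if p90_latency_ms <= 0 or monthly_cost_usd <= 0:
        raise ValueError("latency and cost must be positive")
    return rps / p90_latency_ms / monthly_cost_usd


aws = value_score(50, 569, 15)
print(f"{aws:.3f}")  # 0.006
```

Since the ratio divides by both latency and cost, halving either one doubles the score, so a cheap host with mediocre latency can score the same as a pricier host that responds twice as fast.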

Conclusion

Suffice to say I’ll be using Hetzner for both the ease of setup and the best all around performance per dollar. Surprising to me is Azure coming in second on the performance per dollar chart, however the very complex management console and altered vocabulary for everything is not for those looking to avoid complexity. Also surprising to me is how poorly the new generation of hosting platforms fared. All but one were nonstarters, and the only one I did deploy on had awful performance for the price.

Hopefully this info will help you in your search for hosting!
