Contact site at dillonshook dot com

Tradeoffs in Testing

I’m finally getting around to putting down in words the testing philosophy I’ve developed over the years. Some of it might rock the boat a bit, and other parts may be obvious to some folks, but what I’d most like you to take away is a reflection on your own testing philosophy and the tradeoffs you’re making. There’s no free lunch.

Choosing how much time to invest in testing is all about balancing how confidently you can say your program is doing what you intend it to against how fast you can modify or add to your program. Writing tests takes time that could otherwise be spent adding new features. When requirements change in a project with tons of tests, tests will break, and that means spending time debugging and evaluating what should change in the tests as well. The reason to write tests, of course, is to save the time you would otherwise spend manually verifying that everything still works after changing the program.

So the testing approach that strikes the right balance of value for time spent is extremely dependent on the type of project you’re working on. It really irks me to read articles on testing that bake in lots of assumptions about the project, team size, product needs, etc. Their conclusions could be totally wrong for your situation! The obvious example is that you need way more tests for an MRI machine than for your weekend web app side project.

In general I think these are the four big areas that determine what your test coverage should look like: team size, product maturity, dependent code, and cost of failure.

Let’s break down each one:

When the team size is small, each developer knows a greater percentage of the codebase and is less likely to inadvertently break something because they didn’t know about it. As the team size increases, developers tend to focus on smaller parts of the codebase, so increased test coverage can catch bugs when cross-cutting changes affect parts of the system that the author has no awareness of.

Product maturity is all about how big your product is and how often the requirements are changing. In a mature product the requirements and tests change less frequently, so each test has a longer time in which it can catch issues “for free”, which means better value for the time it took to write. Tests also help everyone remember all the different features of the product that are buried away after the people who built them have left. Once the product becomes so mature that it’s put into maintenance mode, you hit a point of diminishing returns where adding tests becomes pointless since nothing is changing that could break it. On the opposite side of the spectrum, when you’re working on a new greenfield project, requirements are still being figured out and are changing constantly, lots of code is getting thrown away, and the tests you write will soon become obsolete, so it’s also not a great time investment.

When you’re writing a package, library, or something else that’s low in the stack, you’re going to have a lot of dependent code. When you use other people’s code yourself and it breaks, you get sad. Don’t make people sad by failing to test code that other people depend on.

Cost of failure is the big one here, and I think it warrants exponential growth in test coverage. This is the heart of why the MRI machine in the previous example needs way more tests than the side project. The cost of failure in the side project is just your own time; in the MRI machine it’s potentially someone’s life and a multi-million-dollar machine. The cost of failure is somewhat intertwined with the other factors, but there are large products with lots of people working on them that still have a way lower cost of failure than something like a Mars rover.

So how do all these factors combine together? My mental model is to just average them all together. So for example when I built Macrocosm it was a solo project where requirements were changing constantly (low product maturity), there was no dependent code, and a low cost of failure. All these together tell me that very low test coverage is appropriate, and that’s what I had. Could I have caught bugs with more tests? Yes. Would it have been worth it for the amount of time it would have taken to write and maintain them? No.

Another arbitrary example: you’re on a small team working on a legacy product that has little to no dependent code but still brings in a ton of money for the business. You’ll have to pick the arbitrary numbers on the scale for yourself, but to me this warrants a moderately high amount of testing, mainly driven by the cost of failure factor but tempered by the small team and the lack of dependent code.
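As a rough sketch of the averaging model, assume each factor gets a made-up score from 1 to 5. The scale, the `ProjectFactors` type, and the example scores below are all illustrative assumptions rather than measurements from a real project.

```typescript
// Hypothetical 1-5 scale for each factor; the numbers are illustrative only.
type Score = 1 | 2 | 3 | 4 | 5;

interface ProjectFactors {
  teamSize: Score;        // 1 = solo developer, 5 = very large team
  productMaturity: Score; // 1 = greenfield, 5 = mature and stable
  dependentCode: Score;   // 1 = nothing depends on it, 5 = widely used library
  costOfFailure: Score;   // 1 = only your own time, 5 = lives at stake
}

// Plain average of the four factors: higher means more testing is warranted.
function testingNeed(f: ProjectFactors): number {
  return (f.teamSize + f.productMaturity + f.dependentCode + f.costOfFailure) / 4;
}

// A solo project with constantly changing requirements, no dependent code,
// and a low cost of failure.
const soloProject: ProjectFactors = {
  teamSize: 1,
  productMaturity: 1,
  dependentCode: 1,
  costOfFailure: 1,
};

// A small team on a legacy product that brings in a lot of money.
const legacyMoneyMaker: ProjectFactors = {
  teamSize: 2,
  productMaturity: 5,
  dependentCode: 1,
  costOfFailure: 5,
};

console.log(testingNeed(soloProject));      // 1
console.log(testingNeed(legacyMoneyMaker)); // 3.25
```

A plain average is the simplest reading of the mental model. If cost of failure should dominate, weighting that score more heavily would be a reasonable variation.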

With a rough idea of how much to test, let’s talk about some goals for actually writing tests.

Testing goals

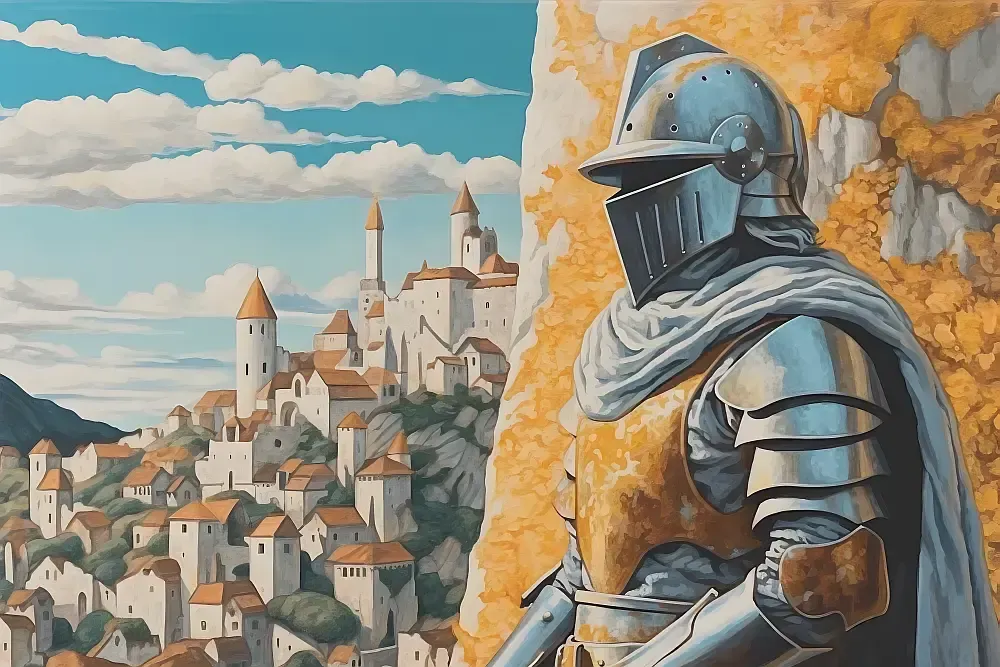

The overarching goal of your test suite should be to build your testing suit of armor piece by piece, where each test overlaps with the others only enough to ensure there aren’t any gaps. You don’t want each of your tests asserting the same thing over and over (like testing authentication for each route in a web API). That just adds bulk and slows you down when requirements change.

Your knight starts out with no testing armor. They’re quick on their feet and can dance around other fully clad knights, but they’re vulnerable. When it comes time to add some protection think about what’s most vulnerable and focus on that first. What’s the most vulnerable part of your application? Really stop and think about what the most likely scenarios are for bugs and figure out how to write a test to catch them. If the scenarios are improbable think twice about if you really need a test. For example, I’ve come across a number of tests for simple React components where all they do is mount the component and assert it rendered. There wasn’t anything complicated the components were doing that was a likely scenario to break, so we decided to shed some weight.

For this reason I think code coverage percentage is generally a dumb metric to strive for in all but the projects with the highest testing needs. What’s the value in testing all the code in your project that is so straightforward that the only way it could break is if it was intentionally changed for new requirements? Ideally you’re striving for the first part of the mantra “Either your code is so simple that there are obviously no bugs, or your code is so complex that there are no obvious bugs”. From what I’ve seen in projects with arbitrary test coverage goals, people spend more time gaming the coverage metric than they save by coming up with useful tests that catch bugs.

Back to the armor: your tests should also come in layers. Under the plate armor there’s a padded gambeson, which provides more protection and softens blows at a fraction of the weight. Architecting your code in layers where each layer has a single responsibility makes testing so much easier. For example, in a typical web API backend, if your code has different layers to handle data fetching/persistence, business logic, and presentation transformations, each layer can test its own responsibilities without worrying about the rest. Think about how to structure your code so it’s easier to test. This often means isolating complex business logic in a pure function that can be rigorously tested. Depending on where you are on the test coverage scale, you might determine that tests for the complex business logic layer are sufficient and everything else is straightforward enough.
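Here’s a minimal sketch of what isolating business logic in a pure function can look like. The discount rule, `OrderLine`, and `orderTotalCents` are invented for illustration; the point is only that the function touches no database or network, so the checks need no setup.

```typescript
// Hypothetical order line used only for this example.
interface OrderLine {
  unitPriceCents: number;
  quantity: number;
}

// Pure function: no I/O, and the result depends only on the arguments.
// Made-up rule: members get 10% off orders of $100.00 or more.
function orderTotalCents(lines: OrderLine[], isMember: boolean): number {
  const subtotal = lines.reduce(
    (sum, line) => sum + line.unitPriceCents * line.quantity,
    0,
  );
  const discount = isMember && subtotal >= 10000 ? Math.round(subtotal * 0.1) : 0;
  return subtotal - discount;
}

// Checks around the threshold, which is the most likely place for a bug.
const twoAtFifty: OrderLine[] = [{ unitPriceCents: 5000, quantity: 2 }];
const justUnder: OrderLine[] = [{ unitPriceCents: 9999, quantity: 1 }];

console.log(orderTotalCents(twoAtFifty, true));  // 9000
console.log(orderTotalCents(twoAtFifty, false)); // 10000
console.log(orderTotalCents(justUnder, true));   // 9999
```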

You might ask, though, how can each layer not worry about the rest if each layer depends on other layers? This is where Dependency Injection really shines. You can build out a suite of reusable fake implementations to inject into the layer you’re testing. Building out full testing implementations of a layer may take longer initially, but the time should be well spent when you can reuse them across all your tests and have a single place to update when the layer behavior changes. Contrast that with the Jest mocking approach, which monkey patches dependencies in each test and is nearly guaranteed to break across many tests when the layer behavior changes.
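A small sketch of the reusable fake idea, under the assumption of a data layer described by an interface. `User`, `UserStore`, `InMemoryUserStore`, and `greetingFor` are hypothetical names, and the store is synchronous only to keep the example short.

```typescript
// Hypothetical types for illustration.
interface User {
  id: string;
  name: string;
}

// The data layer's interface. The business layer depends only on this.
interface UserStore {
  findById(id: string): User | undefined;
}

// Reusable fake: a complete in-memory implementation that every test can share.
// If the real layer's behavior changes, this is the single place to update.
class InMemoryUserStore implements UserStore {
  private users = new Map<string, User>();

  add(user: User): void {
    this.users.set(user.id, user);
  }

  findById(id: string): User | undefined {
    return this.users.get(id);
  }
}

// Business logic receives its dependency as an argument instead of importing it.
function greetingFor(store: UserStore, id: string): string {
  const user = store.findById(id);
  return user ? `Welcome back, ${user.name}!` : "Welcome, guest!";
}

const fakeStore = new InMemoryUserStore();
fakeStore.add({ id: "1", name: "Ada" });

console.log(greetingFor(fakeStore, "1")); // Welcome back, Ada!
console.log(greetingFor(fakeStore, "2")); // Welcome, guest!
```

In production code the same `greetingFor` would be handed a database-backed `UserStore`; nothing in the function or its tests needs patching.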

Another way to think about it is to think of your test suite as its own standalone application. It’s “just” another program, which happens to have the requirements of verifying what another program does. Don’t throw out all your best practices and architecture for writing a good app when it comes to the test suite! I’ve seen test suites where there’s tons of boilerplate setup code repeated everywhere and lots of inconsistencies between tests. The test suite has to be maintained just like the application.

Ideally you want your tests as decoupled from the implementation as possible so they are easier to maintain. You want to be able to assert on just the important logic (why your application exists in the first place) and have the tests remain the same as you refactor the implementation, so you’re sure it behaves the same. This of course is more feasible the higher up the testing pyramid you go, but it’s still important in unit tests as well. Test the interfaces, not the implementation details.
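To make “test the interfaces” concrete, here’s a hedged sketch with a hypothetical `RecentSearches` class. The check only goes through the public `add` and `list` methods, so swapping the private array for some other data structure would not require touching it.

```typescript
// Hypothetical class for illustration.
class RecentSearches {
  private items: string[] = [];
  private limit: number;

  constructor(limit: number) {
    this.limit = limit;
  }

  // Newest term goes first; a repeated term moves to the front instead of duplicating.
  add(term: string): void {
    const withoutTerm = this.items.filter((t) => t !== term);
    this.items = [term].concat(withoutTerm).slice(0, this.limit);
  }

  // Returns a copy so callers can't mutate internal state.
  list(): string[] {
    return this.items.slice();
  }
}

const searches = new RecentSearches(2);
searches.add("armor");
searches.add("gambeson");
searches.add("armor");
searches.add("helmet");

// Assert on what callers can observe, not on the private `items` array.
console.log(searches.list()); // [ 'helmet', 'armor' ]
```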

If the dev writing the code is also writing the tests, they have certain blind spots, assumptions, and probably a bit of laziness (*cough* guilty as charged) about how the feature will be used and what scenario to test. Automated testing can get you far, but it’s still not a complete substitute for real users prodding it. As an exercise, take the time to try and break your own code when you write it. Throw lots of data at it. Throw some naughty data at it. Fuzz it. Try it in a different environment (hardware/OS/browser/etc). Get annoyed at it when it takes too long. I can almost guarantee you’ll find something you didn’t think of in the routine testing motions.
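As one possible way to throw naughty and random data at a function, here’s a tiny hand-rolled fuzz loop. The `slugify` function, the list of naughty inputs, and the property being checked are all assumptions made up for this sketch; a dedicated fuzzing or property-testing library would do this more thoroughly.

```typescript
// Hypothetical function under test.
function slugify(input: string): string {
  return input
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
}

// A few hand-picked "naughty" inputs.
const naughtyInputs: string[] = [
  "",
  "   ",
  "---",
  "Ünïcödé",
  "<script>alert(1)</script>",
  "😀 emoji 😀",
];

// Small seeded generator (Park-Miller LCG) so a failing run can be reproduced.
// The seed must be between 1 and 2147483646.
function makeRandom(seed: number): () => number {
  let state = seed;
  return () => {
    state = (state * 48271) % 2147483647;
    return state / 2147483647;
  };
}

// Builds a string of random characters from the first 0x250 code points.
function randomString(rand: () => number, maxLength: number): string {
  const length = Math.floor(rand() * maxLength);
  let out = "";
  for (let i = 0; i < length; i++) {
    out += String.fromCharCode(Math.floor(rand() * 0x250));
  }
  return out;
}

// Property: a slug is empty, or lowercase alphanumeric runs joined by single dashes.
function findFailures(inputs: string[]): string[] {
  const validSlug = /^([a-z0-9]+(-[a-z0-9]+)*)?$/;
  return inputs.filter((s) => !validSlug.test(slugify(s)));
}

const rand = makeRandom(42);
const randomInputs: string[] = [];
for (let i = 0; i < 200; i++) {
  randomInputs.push(randomString(rand, 50));
}

const failures = findFailures(naughtyInputs.concat(randomInputs));
console.log(failures.length === 0 ? "no failures found" : failures);
```

Passing the property check doesn’t mean the output is what users want. Looking at what `slugify` does to the accented input is exactly the kind of surprise this exercise tends to surface.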

The best time to find bugs is right after you wrote the code, while everything is still fresh in your head. John Carmack has talked about running code with the debugger right after you write it to verify it’s working the way you expect. I’m similarly amazed that more developers don’t do this, since it’s not only such a good way to catch bugs as soon as possible, but also a great way of Upgrading your Brain Virtual Machine. This is harder to do in environments where there’s lots of async and framework code, but it’s still worth doing when writing complex functions and will give you a better understanding of everything that is going on.

Wrapping up

Hopefully reading this has sparked some introspection on your own testing philosophy. I didn’t want to rehash specific testing methodologies that are well covered by other articles, but instead get people thinking about the cost-benefit analysis of the methods as applied to their situation. Don’t blindly follow advice from people. Especially me.
